Blog Post | Science & Technology

Centers of Progress, Pt. 40: San Francisco (Digital Revolution)

The innovations developed in San Francisco have transformed how we work, communicate, and learn.

Today marks the 40th installment in a series of articles by HumanProgress.org called Centers of Progress. Where does progress happen? The story of civilization is in many ways the story of the city. It is the city that has helped to create and define the modern world. This biweekly column will give a short overview of urban centers that were the sites of pivotal advances in culture, economics, politics, technology, etc.

Our 40th Center of Progress is San Francisco during the digital revolution, when entrepreneurs founded several major technology companies in the area. The southern portion of the broader San Francisco Bay Area earned the nickname “Silicon Valley” because of the high-technology hub’s association with the silicon transistor, the basic building block of all modern microprocessors. A microprocessor is the central processing unit, or engine, of a computer system, fabricated on a single chip.

Humanity has long strived to develop tools to improve our survival odds and make our lives easier, more productive, and more enjoyable. In the long history of inventions that have made a difference in the average person’s daily life, digital technology, with its innumerable applications, stands out as one of the most significant innovations of the modern age.

Today the San Francisco Bay Area remains best known for its association with the technology sector. With its iconic Victorian houses, sharply sloping hills, trolleys, fog, Chinatown (which bills itself as the oldest and largest one outside Asia), and of course the Golden Gate Bridge, the city of San Francisco is famous for its distinctive views. As Encyclopedia Britannica notes, “San Francisco holds a secure place in the United States’ romantic dream of itself—a cool, elegant, handsome, worldly seaport whose steep streets offer breathtaking views of one of the world’s greatest bays.” Attempts to preserve the city’s appearance have contributed to tight restrictions on new construction. Perhaps relatedly, the city is one of the most expensive in the United States and suffers from a housing affordability crisis. San Francisco has in recent years struggled with widespread homelessness and related drug overdose deaths and crime. With both the country’s highest concentration of billionaires, thanks to the digital technology industry, and the ubiquitous presence of unhoused people, San Francisco is a city of extremes.

Today’s densely populated metropolis was once a landscape of sand dunes. In 1769, a scouting party led by the Spanish explorer Gaspar de Portolá (1716–1786) recorded the first documented sighting of San Francisco Bay. In 1776, European settlement of the area began, led by the Spanish missionary Francisco Palóu (1723–1789) and the army officer José Joaquín Moraga (1745–1785). The following year, Moraga founded San José, a city near the southern end of San Francisco Bay. San José lies about 50 miles from San Francisco but within both the San Francisco Bay Area and the San Jose-San Francisco-Oakland Combined Statistical Area. San Francisco was the northernmost outpost of the Spanish Empire in North America and later the northernmost settlement in Mexico after that country’s independence. But the city remained relatively small and little known.

In 1846, during the Mexican-American War, the United States captured the San Francisco area, although Mexico did not formally cede California until the 1848 Treaty of Guadalupe Hidalgo. At that time, San Francisco only had about 900 residents. That number grew rapidly during the California Gold Rush (1848–1855), when the discovery of gold turned the quiet village into a bustling boomtown of tens of thousands by the end of the period. Development of the city’s port led to further growth and helped the area become a hub in the early radio and telegraph industries, foreshadowing the city’s role as a leader in technology.

In 1906, three-quarters of the city was destroyed by a devastating earthquake and the fires that followed, some of them sparked by gas lines that ruptured in the quake. The city rebuilt from the destruction and continued its growth, along with the broader Bay Area. In 1909, San Jose became the home of one of the first radio stations in the country. In the 1930s, the Golden Gate Bridge became a part of San Francisco’s skyline, and the city’s storied Alcatraz maximum security prison opened, holding famous prisoners such as the Prohibition-era gangster Al Capone (1899–1947). In 1939, in Palo Alto, just over 30 miles south of San Francisco, William Hewlett (1913–2001) and David Packard (1912–1996) founded a company whose first product was an audio oscillator, a laboratory instrument that generates electronic test signals. They named the company Hewlett-Packard. During World War II, the company shifted to making radar and artillery technology. That field soon became linked to computing, because researchers at the University of Pennsylvania built a new machine to calculate artillery firing tables, among other tasks: the first general-purpose electronic digital computer.

“Computer” was once a job title for a person who performed calculations. That machine, the Electronic Numerical Integrator and Computer, or ENIAC, debuted in 1945. It cost about $500,000, or nearly $8 million in 2022 dollars, measured 8 feet tall and 80 feet long, weighed 30 tons, and needed constant maintenance to replace its fragile vacuum tubes. Back when computers were the size of a room and required many people to operate them, they had roughly 1,300 times less computing power than a modern pocket-sized smartphone, which costs about 17,000 times less.

San Francisco and Silicon Valley’s greatest claim to fame came with the dawn of more convenient and powerful digital technology. In 1956, the physicist and inventor William Shockley (1910–1989) moved from the East Coast to Mountain View, a city on San Francisco Bay about 40 miles south of San Francisco, to live closer to his ailing mother, who still lived in his childhood home of Palo Alto. That year he won the Nobel Prize in Physics along with John Bardeen (1908–1991) and Walter Houser Brattain (1902–1987). The prize honored them for coinventing the first working transistor almost a decade earlier, in 1947, at Bell Laboratories in New Jersey.

After moving to California, Shockley founded Shockley Semiconductor Laboratory, one of the first companies to set out to make transistors from silicon rather than germanium, the material used in most earlier transistors, which performs poorly at high temperatures. His work provided the basis for many further electronic developments. Also in 1956, IBM’s San Jose labs invented the hard-disk drive. That same year, Harry Huskey (1916–2017), a professor at the University of California, Berkeley, some 14 miles from San Francisco, designed the G-15, the Bendix Corporation’s first digital computer.

Shockley had an abrasive personality and later became a controversial figure because of his vocal fringe views on eugenics and mass sterilization. In 1957, eight of Shockley’s employees left over disagreements with him and started their own enterprise with backing from the investor Sherman Fairchild (1896–1971). They named it Fairchild Semiconductor. Shockley called them “the Traitorous Eight.” In the 1960s, Fairchild Semiconductor made many of the computer components for the Apollo space program directed from Houston, our previous Center of Progress. In 1968, two of the “Traitorous Eight,” Gordon Moore (1929–2023) and Robert Noyce (1927–1990), the latter of whom earned the nickname “the Mayor of Silicon Valley,” left Fairchild to start a new company, now headquartered in Santa Clara, about 50 miles southeast of San Francisco. They named it Intel. Moore remains well known for Moore’s Law. In 1965, he predicted that the number of components on an integrated circuit would double every year, a rate he revised in 1975 to every two years.

In 1969, the Stanford Research Institute, then affiliated with Stanford University and located some 35 miles southeast of San Francisco, became one of the first four “nodes” of the Advanced Research Projects Agency Network. ARPANET was a research project that would one day evolve into the internet. In 1970, Xerox opened the PARC laboratory in Palo Alto, which went on to develop Ethernet networking and pioneer the graphical user interface. In 1971, journalist Don Hoefler (1922–1986) published a three-part report on the burgeoning computer industry in the southern San Francisco Bay Area that popularized the term “Silicon Valley.” The pace of technological change picked up that same year, when Intel introduced the 4004, the first commercially available microprocessor.

Just as the 19th-century Gold Rush once attracted fortune seekers, the promise of potential profit and the excitement of new possibilities offered by digital technology drew entrepreneurs and researchers to the San Francisco Bay Area. In the 1970s, companies such as Atari, Apple, and Oracle were all founded in the area. By the 1980s, the San Francisco Bay Area was the undisputed capital of digital technology. (Some consider the years from 1985 to 2000 to constitute the golden era of Silicon Valley, when legendary entrepreneurs such as Steve Jobs (1955–2011) were active there.) San Francisco suffered another devastating earthquake in 1989, although its death toll was relatively small. In the 1990s, companies founded in the San Francisco Bay Area included eBay, Yahoo!, PayPal, and Google. The following decade, Facebook and Tesla joined them. As these companies created value for their customers and achieved commercial success, fortunes were made, and the San Francisco Bay Area grew wealthier. That was particularly true of San Francisco.

While many of the important events of the digital revolution took place across a range of cities in the San Francisco Bay Area, San Francisco itself was also home to the founding of several significant technology companies. Between 1995 and 2015, major companies founded in or relocated to San Francisco included Airbnb, Craigslist, Coinbase, DocuSign, DoorDash, Dropbox, Eventbrite, Fitbit, Flickr, GitHub, Grammarly, Instacart, Instagram, Lyft, Niantic, OpenTable, Pinterest, Reddit, Salesforce, Slack, TaskRabbit, Twitter, Uber, WordPress, and Yelp.

San Francisco helped create social media and the so-called sharing economy that offers many workers increased flexibility. By streamlining the process of such things as grocery deliveries, restaurant reservations, vacation home rentals, ride-hailing services, second-hand sales, cryptocurrency purchases, and work group chats, enterprises based in San Francisco have made countless transactions and interactions far more convenient.

New technologies often present challenges as well as benefits, and the innovations of San Francisco along with Silicon Valley are certainly no exception. Concerns about data privacy, cyberbullying, social media addiction, and challenges related to content moderation of online speech are just some of the issues attracting debate today that relate to digital technology. But there is no going back to a world without computers, and most would agree that the immense gains from digital technology outweigh the various dilemmas posed by it.

Practically everyone with access to a computer or smartphone has direct experience benefiting from the products of several San Francisco companies, and the broader San Francisco Bay Area played a role in the very creation of the internet and modern computers. It is difficult to summarize all the ways that computers, tablets, and smartphones have forever changed how humanity works, communicates, learns, seeks entertainment, and more. There is little doubt that San Francisco has been one of the most innovative and enterprising cities on Earth, helping to define the rise of the digital age that transformed the world. For these reasons, San Francisco is our 40th Center of Progress.

Today marks the 49th installment in a series of articles by HumanProgress.org titled Heroes of Progress. This bi-weekly column provides a short introduction to heroes who have made an extraordinary contribution to the well-being of humanity.

This week, our heroes are Charles Babbage and Ada Lovelace—two 19th century English mathematicians and pioneers of early computing. Babbage is often called “The Father of Computing” for conceiving the first automatic digital computer. Building on Babbage’s work, Lovelace was the first person to recognize that computers could have applications beyond pure calculation. She has been dubbed the “first computer programmer” for creating the first algorithm for Babbage’s machine. Babbage and Lovelace’s work laid the groundwork for modern-day computers. Without their contributions, much of the technology we have today would likely not exist.

Charles Babbage was born on December 26, 1791, in London, England. His father was a successful banker, and Babbage grew up in affluence. As a child, Babbage attended several of England’s top private schools, and his father also made sure he had many tutors to help with his education. As a teenager, Babbage joined the Holmwood Academy in Middlesex, England, whose large library helped him develop a passion for mathematics. In 1810, Babbage began studying mathematics at the University of Cambridge.

Before arriving at Cambridge, Babbage had already learned much of contemporary mathematics, and he was disappointed by the level of mathematics taught at the university. In 1812, Babbage and several friends created the “Analytical Society,” which aimed to bring to England new developments in mathematics from elsewhere in Europe, such as Leibniz’s notation for calculus.

Babbage’s reputation as a mathematical genius quickly developed. By 1815, he had left Cambridge and begun lecturing on astronomy at the Royal Institution. The following year, he was elected a Fellow of the Royal Society. Despite several successful lectures at the Royal Institution, Babbage struggled to find a full-time position at a university, so throughout early adulthood he had to rely on financial support from his father. In 1820, Babbage was instrumental in creating the Royal Astronomical Society, which aimed to reduce astronomical calculations to a more standard form.

In the early 19th century, mathematical tables (lists of numbers showing the results of calculations) were central to engineering, astronomy, navigation, and science. However, at the time, all the calculations in the mathematical tables were done by humans and mistakes were commonplace. Given this problem, Babbage wondered if he could create a machine to mechanize the calculation process.

In 1822, in a paper to the Royal Astronomical Society, Babbage outlined his idea for a machine that could automatically calculate the values needed in astronomical and mathematical tables. The following year, Babbage obtained a government grant to build the machine, dubbed the “Difference Engine,” which was designed to calculate series of values to as many as twenty decimal places.
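The Difference Engine takes its name from the method of finite differences. For a polynomial of degree n, the n-th differences between successive table entries are constant, so once the first few differences are known, every further entry follows by addition alone. The Python sketch below illustrates that principle; the helper names `initial_differences` and `tabulate` are hypothetical, and the code models only the arithmetic, not the engine’s gear trains or its twenty-digit registers.

```python
def initial_differences(poly, start, degree):
    """Return [f(x0), Δf(x0), Δ²f(x0), ...] for a step size of 1.

    The starting values are found once by ordinary evaluation, the way a
    human operator would have prepared them by hand before cranking."""
    values = [poly(start + i) for i in range(degree + 1)]
    diffs = []
    while values:
        diffs.append(values[0])
        # Each pass replaces the list with the differences of neighbors.
        values = [b - a for a, b in zip(values, values[1:])]
    return diffs


def tabulate(diffs, count):
    """Produce `count` table entries using nothing but repeated addition."""
    columns = list(diffs)  # one column per order of difference
    table = []
    for _ in range(count):
        table.append(columns[0])
        # Update lowest order first, so each column is increased by the
        # not-yet-updated value of the next-higher difference.
        for i in range(len(columns) - 1):
            columns[i] += columns[i + 1]
    return table
```

For example, `tabulate(initial_differences(lambda x: x * x + x + 41, 0, 2), 5)` returns `[41, 43, 47, 53, 61]`, the values of x² + x + 41 for x = 0 through 4. After the three starting numbers are set, no multiplication is needed at all.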

In 1828, Babbage became the Lucasian Professor of Mathematics at Cambridge University. He largely neglected his teaching responsibilities and spent most of his time writing papers and working on the Difference Engine. In 1832, Babbage and his engineer Joseph Clement produced a small working model of the Difference Engine. The following year, work on a larger, full-scale engine stalled, and Babbage turned his attention to another project.

In the mid-1830s, Babbage started to develop plans for what he called the “Analytical Engine,” which would become the forerunner of the modern digital computer. Whereas the Difference Engine was designed for mechanized arithmetic (essentially an early calculator that worked only by addition), the Analytical Engine would be able to perform any arithmetical operation according to instructions fed in on punched cards: stiff pieces of paper that encode data through the presence or absence of holes in predefined positions. The punched cards would deliver instructions to the machine as well as record the results of its calculations.
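A punched card stores information purely as a pattern of holes. The sketch below uses a deliberately simple, hypothetical layout (one column per decimal digit, with a single hole in one of ten rows) to show how a number can be written to a card and read back. It illustrates the principle only and is not the format of Babbage’s actual cards; `punch`, `read`, and `render` are illustrative names.

```python
ROWS = 10  # hypothetical layout: hole positions 0-9 in every column


def punch(number, columns):
    """Encode a non-negative integer as a card: a list of columns, each a
    list of ROWS booleans where True means 'hole punched here'."""
    if number < 0 or number >= 10 ** columns:
        raise ValueError("number does not fit on the card")
    card = []
    for ch in str(number).zfill(columns):
        column = [False] * ROWS
        column[int(ch)] = True  # exactly one hole per column
        card.append(column)
    return card


def read(card):
    """Recover the number by locating the single hole in each column."""
    digits = []
    for column in card:
        holes = [row for row, punched in enumerate(column) if punched]
        if len(holes) != 1:
            raise ValueError("each column must have exactly one hole")
        digits.append(str(holes[0]))
    return int("".join(digits))


def render(card):
    """Draw the card row by row: 'o' for a hole, '.' for solid card."""
    return "\n".join(
        "".join("o" if column[row] else "." for column in card)
        for row in range(ROWS)
    )


print(render(punch(1843, 4)))
```

The key property is that reading needs no interpretation beyond "hole or no hole," which is what made cards practical input for a purely mechanical device.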

The design of the Analytical Engine anticipated many features of modern computers. It kept data, held in a memory Babbage called the “store,” separate from the program on the punched cards; arithmetic took place in a unit he called the “mill”; its control unit could make conditional jumps; it had separate input and output units; and its general operation was instruction-based. Babbage initially envisioned the Analytical Engine as having applications only relating to pure calculation. That soon changed thanks to the work of Ada Lovelace.
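To make the separation of program and data, and the role of conditional jumps, more concrete, here is a toy machine in Python. Its instruction set (`add`, `sub`, `mul`, and a jump-if-not-zero `jnz`) and the names `run` and `FACTORIAL` are invented for illustration; they only loosely echo the Analytical Engine’s split between an arithmetic unit (Babbage’s “mill”) and a memory (his “store”). The operation cards are a fixed list the machine only reads, while results go into a separate, numbered store.

```python
# Hypothetical operation "cards":
#   (op, a, b, dest)    op in {"add", "sub", "mul"}; store[dest] = store[a] op store[b]
#   ("jnz", v, target)  jump to card `target` if store[v] is not zero
FACTORIAL = [
    ("mul", 1, 0, 1),  # product := product * counter
    ("sub", 0, 2, 0),  # counter := counter - 1
    ("jnz", 0, 0),     # if counter != 0, go back to the first card
]


def run(program, store, max_steps=10_000):
    """Execute operation cards against a separate, mutable data store."""
    mill = {  # the arithmetic unit
        "add": lambda x, y: x + y,
        "sub": lambda x, y: x - y,
        "mul": lambda x, y: x * y,
    }
    pc = 0  # position of the next card to read
    for _ in range(max_steps):
        if pc >= len(program):
            return store  # ran past the last card: halt
        card = program[pc]
        if card[0] == "jnz":
            _, var, target = card
            pc = target if store[var] != 0 else pc + 1
        else:
            op, a, b, dest = card
            store[dest] = mill[op](store[a], store[b])
            pc += 1
    raise RuntimeError("program did not halt within max_steps")
```

With the store set to `[5, 1, 1]` (counter, running product, constant one), `run(FACTORIAL, [5, 1, 1])[1]` is `120`. The backward jump on the third card lets three cards do the work of fifteen; the sketch assumes a starting counter of at least 1.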

Augusta Ada King, Countess of Lovelace (née Byron), was born on December 10, 1815, in London, England. She was the only legitimate child of the poet Lord Byron, a member of the House of Lords, and the mathematician Anne Isabella Byron. However, just a month after her birth, Byron separated from Lovelace’s mother and soon left England. Eight years later, he died of disease while supporting the Greek side in the Greek War of Independence.

Throughout Ada’s early life, her mother raised her on a strict regimen of science, logic, and mathematics. Although frequently ill, and bedridden for nearly a year at age fourteen, Lovelace was fascinated by machines. As a child, she often designed fanciful boats and flying machines.

As a teenager, Lovelace honed her mathematical skills and quickly became acquainted with many of the leading intellectuals of the day. In 1833, her tutor, Mary Somerville, introduced her to Charles Babbage, and the pair quickly became friends. Lovelace was fascinated by Babbage’s plans for the Analytical Engine, and Babbage was so impressed with her mathematical ability that he once described her as “The Enchantress of Numbers.”

In 1840, Babbage visited the University of Turin to give a seminar on his Analytical Engine. Luigi Menabrea, an Italian engineer and future Prime Minister of Italy, attended the seminar and wrote an account of it in French. In 1842, Lovelace spent nine months translating Menabrea’s article into English. She added her own detailed notes, which ended up about three times longer than the original article.

Published in 1843, Lovelace’s notes described the differences between the Analytical Engine and previous calculating machines, chiefly the former’s ability to be programmed to solve any mathematical problem. The notes also included a new algorithm for calculating a sequence of Bernoulli numbers (a sequence of rational numbers that appears frequently in number theory). Because her algorithm was the first to be created specifically for use on a computer, Lovelace is often regarded as the world’s first computer programmer.
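For readers curious what Lovelace’s program computed, the sketch below generates Bernoulli numbers exactly with Python’s `fractions` module. It uses the modern textbook recurrence (the sum over k from 0 to m of C(m+1, k)·B_k equals 0 for every m ≥ 1, with B_0 = 1), not the step-by-step table of operations in her final note (Note G), which also numbered the values differently. The function name `bernoulli` is illustrative.

```python
from fractions import Fraction
from math import comb


def bernoulli(n):
    """Return [B_0, B_1, ..., B_n] as exact fractions.

    Solves sum_{k=0}^{m} C(m+1, k) * B_k = 0 for B_m, one m at a time.
    This convention gives B_1 = -1/2."""
    b = [Fraction(1)]
    for m in range(1, n + 1):
        partial = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-partial / (m + 1))  # C(m+1, m) = m + 1
    return b


for i, value in enumerate(bernoulli(10)):
    print(f"B_{i} = {value}")
```

In this convention every odd-indexed value after B_1 is zero, and `bernoulli(10)[10]` is `Fraction(5, 66)`. Exact fractions matter here: the numbers alternate in sign and grow quickly, so floating-point rounding would soon corrupt later terms.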

Whereas Babbage designed the Analytical Engine for purely mathematical purposes, Lovelace was the first person to see potential uses of computers that went far beyond number-crunching. She realized that the numbers within the computer could represent other entities, such as letters or musical notes. She also prefigured many of the concepts associated with modern computers, including software and subroutines.
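Lovelace’s insight underlies all digital media: once symbols are assigned numbers, operations on the numbers become operations on the symbols. As a small illustration, the sketch below borrows the much later MIDI convention (an assumption for this example, in which note 60 is middle C) to store a melody as numbers, so that changing its key is simple addition. Text works the same way through character codes. The names `name` and `transpose` are hypothetical.

```python
NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def name(note):
    """Spell a MIDI-style note number, where 60 is middle C (C4)."""
    return f"{NAMES[note % 12]}{note // 12 - 1}"


def transpose(melody, semitones):
    """Changing a melody's key is just adding a constant to every number."""
    return [note + semitones for note in melody]


# Opening of "Twinkle, Twinkle, Little Star" in C major: C C G G A A G
melody = [60, 60, 67, 67, 69, 69, 67]
print([name(n) for n in transpose(melody, 2)])  # the same tune in D major

# Letters are numbers too: Unicode code points for the name "ADA".
text_codes = [ord(c) for c in "ADA"]
print(text_codes, "".join(chr(c) for c in text_codes))
```

Nothing in `transpose` knows anything about music; the meaning lives entirely in the agreed mapping between numbers and notes, which is the distinction Lovelace drew.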

The Analytical Engine was never built in Babbage’s or Lovelace’s lifetime. However, that was due to funding problems and personality clashes between Babbage and potential backers, rather than to any flaw in its design.

Throughout the remainder of his life, Babbage dabbled in many different fields. He stood for election to Parliament more than once. He wrote several books, including On the Economy of Machinery and Manufactures (1832), which explored the commercial advantages of the division of labor. He contributed to reforms of the English postal system. He also invented an early type of speedometer and the locomotive cowcatcher (the metal frame attached to the front of a train to clear the track of obstacles). On October 18, 1871, Babbage died at his home in London. He was 79 years old.

After translating Menabrea’s account of Babbage’s lecture, Lovelace turned to several different projects, including an attempt to create a mathematical model for how the brain gives rise to thoughts and nerves to feelings, an objective she never achieved. On November 27, 1852, Lovelace died of uterine cancer. She was just 36 years old.

During his lifetime, Babbage declined both a knighthood and a baronetcy. In 1824, he received the Gold Medal from the Royal Astronomical Society “for his invention of an engine for calculating mathematical and astronomical tables.” Since their deaths, many buildings, schools, university departments and awards have been named in Babbage’s and Lovelace’s honor.

Thanks to the work of Babbage and Lovelace, the field of computation was changed forever. Without Babbage’s work, the world’s first automatic digital computer wouldn’t have been conceived when it was. Likewise, many of the main elements that modern computers use today would likely not have been developed until much later. Without Lovelace, it may have taken humanity much longer to realize that computers could be used for more than just mathematical calculations. Together, Babbage and Lovelace laid the groundwork for modern-day computing, which is used by billions of people across the world and underpins much of our progress today. For these reasons, Charles Babbage and Ada Lovelace are our 49th Heroes of Progress.


Computers Allow Us to Accomplish More with Less


Researchers have just developed a way to fit yet more transistors into less space, creating an even more efficient computer chip. The breakthrough is good news for “Moore’s Law,” the idea that the number of transistors per square inch on an integrated circuit will double every two years.
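Stated this way, Moore’s Law is ordinary exponential growth: a starting count N0 becomes N0 × 2^(t/2) after t years. The short Python sketch below projects that rule forward; the function names are illustrative. The starting figure of 2,300 transistors is the count on Intel’s 4004 microprocessor of 1971, and the output is only what the two-year rule predicts, not a measured count for any real chip.

```python
import math


def projected_count(n0, years, doubling_period=2.0):
    """Count after `years` if it doubles every `doubling_period` years."""
    return n0 * 2 ** (years / doubling_period)


def years_to_multiply(factor, doubling_period=2.0):
    """Years needed for the count to grow by `factor` under the same rule."""
    return doubling_period * math.log2(factor)


# Start from the Intel 4004 (about 2,300 transistors) and project 50 years.
print(f"{projected_count(2_300, 50):,.0f}")  # prints 77,175,193,600 (25 doublings)
print(f"{years_to_multiply(1_000):.1f}")     # prints 19.9 (years for a thousandfold gain)
```

Twenty-five doublings multiply the starting count by about 33.5 million, which is why even a small change in the doubling period compounds into an enormous difference over decades.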

Computers have come a long way since the days of ENIAC. That early computer was a $6 million giant (adjusted for inflation) that stood eight feet tall, stretched 80 feet long, weighed 30 tons, and needed frequent downtime to replace failing vacuum tubes. A modern smartphone, in contrast, has about 1,300 times the power of ENIAC and fits in your pocket. It also costs about 17,000 times less. (With a deal like that, it’s no wonder there are now more mobile phone subscriptions than people on the planet.)

The drop-off in the price of computing power is so steep that it’s difficult to comprehend. A megabyte of computer memory cost $400 million in 1957. That’s a hefty price tag even before taking inflation into account; in 2013 dollars, it would be $2.6 billion. In 2015, a megabyte of memory cost about one cent.

The cost of both RAM (roughly analogous to short-term memory) and hard drive storage (long-term memory) has plummeted. Consider the progress just since 1980. In that time, the cost of a gigabyte of RAM fell from over $6 million to less than $5, and the cost of a gigabyte of hard drive storage fell from over $400,000 to three cents.
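Declines this steep are easier to grasp as a constant yearly rate. The Python sketch below, with hypothetical helper names, backs out the compound annual decline and the implied halving time from the megabyte figures above. It treats $2.6 billion (1957, in 2013 dollars) and one cent (2015) as comparable prices, which ignores the small inflation gap between 2013 and 2015 dollars. For the post-1980 gigabyte figures no end year is given, so it computes only the overall fold-change, and because the text gives bounds ("over," "less than"), those are minimums.

```python
import math


def annual_decline(start_price, end_price, years):
    """Constant yearly fractional drop that turns start_price into end_price."""
    return 1 - (end_price / start_price) ** (1 / years)


def halving_time(start_price, end_price, years):
    """Years for the price to halve at that same constant rate."""
    continuous_rate = math.log(start_price / end_price) / years
    return math.log(2) / continuous_rate


# Megabyte of memory: $2.6 billion (1957, in 2013 dollars) vs. about $0.01 (2015).
mb_decline = annual_decline(2.6e9, 0.01, 2015 - 1957)
mb_halving = halving_time(2.6e9, 0.01, 2015 - 1957)
print(f"{mb_decline:.0%} cheaper per year; price halves every {mb_halving:.1f} years")

# Gigabyte since 1980 (lower bounds, since the text says "over" and "less than").
ram_fold = 6e6 / 5
disk_fold = 400e3 / 0.03
print(f"RAM: at least {ram_fold:,.0f}x cheaper; disk: at least {disk_fold:,.0f}x cheaper")
```

On these figures, memory got roughly 36 percent cheaper every year for almost six decades, halving in price about every year and a half.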

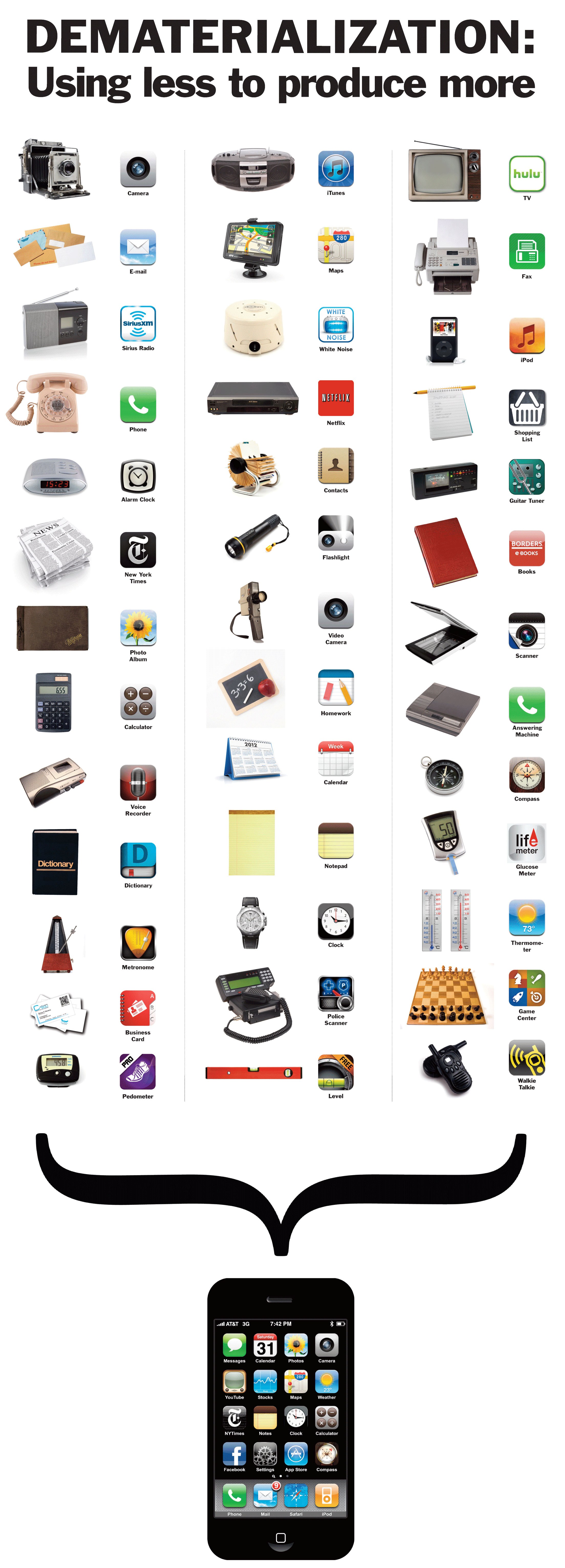

Whether you’re reading this article on a smartphone, tablet, laptop, or desktop computer, please take a moment to appreciate how incredible that device truly is. Ever more powerful, compact and affordable computers make our lives more convenient and connected than our ancestors could have ever imagined.

They also enable a process called dematerialization—they allow us to produce and accomplish more with less. The benefits to the economy, the environment, and human wellbeing are incalculable. If Moore’s Law holds true, regulators stay out of the way, and outdated privacy laws catch up to the current technological realities, then things are only going to get better.

This article first appeared in Reason.