Today marks the 49th installment in a series of articles by HumanProgress.org titled Heroes of Progress. This bi-weekly column provides a short introduction to heroes who have made an extraordinary contribution to the well-being of humanity. You can find the 48th part of this series here.

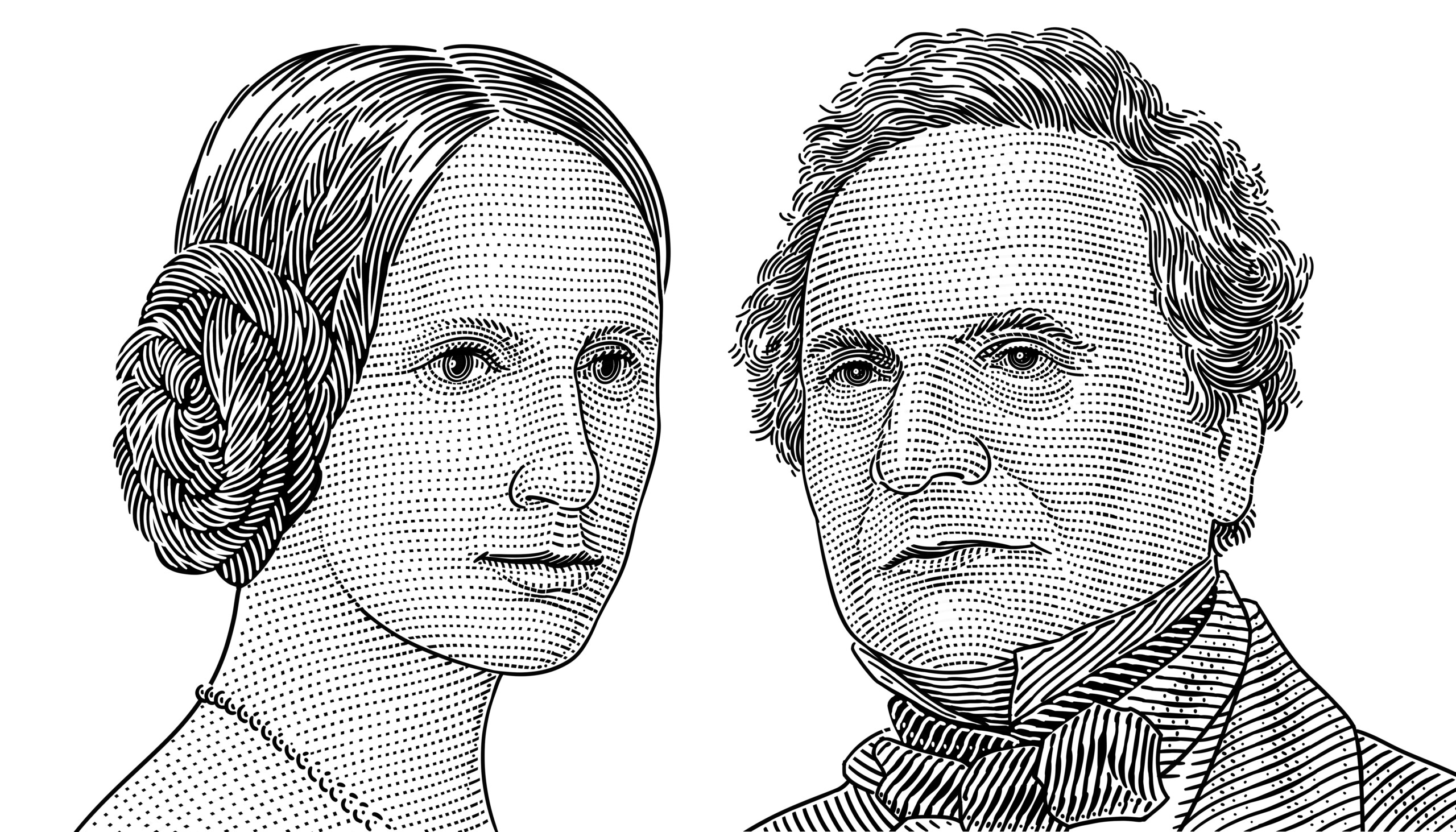

This week, our heroes are Charles Babbage and Ada Lovelace, two 19th-century English mathematicians and pioneers of early computing. Babbage is often called “The Father of Computing” for conceiving the first automatic digital computer. Building on Babbage’s work, Lovelace was the first person to recognize that computers could have applications beyond pure calculation. She has been dubbed the “first computer programmer” for creating the first algorithm for Babbage’s machine. Babbage and Lovelace’s work laid the groundwork for modern-day computers. Without their contributions, much of the technology we have today would likely not exist.

Charles Babbage was born on December 26, 1791, in London, England. His father was a successful banker, and Babbage grew up in affluence. As a child, Babbage attended several of England’s top private schools, and his father hired many tutors to assist with his education. As a teenager, Babbage joined the Holmwood Academy in Middlesex, England. The academy’s large library helped Babbage develop a passion for mathematics. In 1810, Babbage began studying mathematics at the University of Cambridge.

Before arriving at Cambridge, Babbage had already learned much of contemporary mathematics and was disappointed by the level of mathematics being taught at the university. In 1812, Babbage and several friends created the “Analytical Society,” which aimed to introduce to England the new developments in mathematics that were occurring elsewhere in Europe.

Babbage’s reputation as a mathematical genius quickly developed. By 1815, he had left Cambridge and begun lecturing on astronomy at the Royal Institution. The following year, he was elected as a Fellow of the Royal Society. Despite several successful lectures at the Royal Institution, Babbage struggled to find a full-time position at a university. Throughout early adulthood, therefore, he had to rely on financial support from his father. In 1820, Babbage was instrumental in creating the Royal Astronomical Society, which aimed to reduce astronomical calculations into a more standard form.

In the early 19th century, mathematical tables (lists of numbers showing the results of calculations) were central to engineering, astronomy, navigation, and science. However, at the time, all the calculations in the mathematical tables were done by humans and mistakes were commonplace. Given this problem, Babbage wondered if he could create a machine to mechanize the calculation process.

In 1822, in a paper to the Royal Astronomical Society, Babbage outlined his idea for creating a machine that could automatically calculate the values needed in astronomical and mathematical tables. The following year, Babbage obtained a government grant to build this machine, dubbed the “Difference Engine,” which would automatically calculate a series of values to up to twenty decimal places.

In 1828, Babbage became the Lucasian Professor of Mathematics at Cambridge University. He was largely inattentive to his teaching responsibilities and spent most of his time writing papers and working on the Difference Engine. In 1832, Babbage and his engineer Joseph Clement produced a small working model of the Difference Engine. The following year, plans to build a larger, full-scale engine were scrapped when Babbage began to turn his attention to another project.

In the mid-1830s, Babbage started to develop plans for what he called the “Analytical Engine,” which would become the forerunner to the modern digital computer. Whereas the Difference Engine was designed for mechanized arithmetic (essentially an early calculator only capable of addition), the Analytical Engine would be able to perform any arithmetical operation by taking its instructions from punched cards. A punched card is a stiff piece of paper that holds data through the presence or absence of holes in predefined positions. The punched cards would be able to deliver instructions to the mechanical calculator as well as store the results of the computer’s calculations.
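A machine that can only add is still enough to tabulate polynomials, through the method of finite differences from which the Difference Engine takes its name. The Python sketch below illustrates that idea only; it is not a model of Babbage's mechanism, and the function names and the sample polynomial x² + x + 41 are chosen here purely for illustration.

```python
def initial_differences(seed_values):
    """Return the starting column [f(0), Δf(0), Δ²f(0), ...].

    This setup step uses subtraction; it stands in for the hand
    calculation an operator would do once before starting the machine.
    """
    column = []
    row = list(seed_values)
    while row:
        column.append(row[0])
        row = [b - a for a, b in zip(row, row[1:])]
    return column


def tabulate(column, count):
    """Produce `count` table entries using nothing but addition."""
    registers = list(column)
    table = []
    for _ in range(count):
        table.append(registers[0])
        # Each register absorbs its right-hand neighbour;
        # the last register (the constant difference) never changes.
        for i in range(len(registers) - 1):
            registers[i] += registers[i + 1]
    return table


# Seed with f(0), f(1), f(2) for f(x) = x**2 + x + 41.
values = tabulate(initial_differences([41, 43, 47]), 6)
print(values)
```

Once the starting column is set, every further table entry costs only a handful of additions, which is the kind of repetitive work a mechanism can do without the slips a human makes.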

Like modern computers, the design of the Analytical Engine kept data memory and program memory separate. Its control unit could make conditional jumps, it had separate input and output units, and its general operation was instruction-based. Babbage initially envisioned the Analytical Engine as having applications only relating to pure calculation. That soon changed thanks to the work of Ada Lovelace.
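To make those design features concrete, here is a toy interpreter in Python with a data store kept apart from the program, a conditional jump, and a separate output list. It is only a sketch: the instruction names (`MUL`, `SUB`, `JUMP_IF_POSITIVE`, `PRINT`) and the tuple format are invented for this example and do not reproduce the Analytical Engine's actual card format.

```python
def run(program, store):
    """Execute `program` against `store` and return the printed values."""
    output = []   # separate output unit
    pc = 0        # position of the next instruction
    while pc < len(program):
        op, *args = program[pc]
        pc += 1
        if op == "MUL":                 # store[dst] = store[a] * store[b]
            dst, a, b = args
            store[dst] = store[a] * store[b]
        elif op == "SUB":               # store[dst] = store[a] - store[b]
            dst, a, b = args
            store[dst] = store[a] - store[b]
        elif op == "JUMP_IF_POSITIVE":  # conditional jump
            cell, target = args
            if store[cell] > 0:
                pc = target
        elif op == "PRINT":
            output.append(store[args[0]])
        else:
            raise ValueError(f"unknown instruction: {op}")
    return output


# Hypothetical program: factorial of the number in cell 0.
# Cell 1 is the running product, cell 2 holds the constant 1.
FACTORIAL = [
    ("MUL", 1, 1, 0),            # product = product * n
    ("SUB", 0, 0, 2),            # n = n - 1
    ("JUMP_IF_POSITIVE", 0, 0),  # loop back while n > 0
    ("PRINT", 1),
]

print(run(FACTORIAL, [5, 1, 1]))
```

The same program list works for any starting number placed in cell 0, which is the practical payoff of keeping instructions and data apart.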

Augusta Ada King, Countess of Lovelace (née Byron) was born on December 10, 1815, in London, England. Lovelace was the only legitimate child of Lord Byron, the poet and Member of the House of Lords, and the mathematician Anne Isabella Byron. However, just a month after her birth, Byron separated from Lovelace’s mother and left England. Eight years later he died from disease while fighting on the Greek side during the Greek War of Independence.

Throughout Lovelace’s early life, her mother raised her on a strict regimen of science, logic, and mathematics. Although frequently ill and, aged fourteen, bedridden for nearly a year, Lovelace was fascinated by machines. As a child, she would often design fanciful boats and flying machines.

As a teenager, Lovelace honed her mathematical skills and quickly became acquainted with many of the top intellectuals of the day. In 1833, Lovelace’s tutor, Mary Somerville, introduced her to Charles Babbage. The pair quickly became friends. Lovelace was fascinated with Babbage’s plans for the Analytical Engine, and Babbage was so impressed with Lovelace’s mathematical ability that he once described her as “The Enchantress of Numbers.”

In 1840, Babbage visited the University of Turin to give a seminar on his Analytical Engine. Luigi Menabrea, an Italian engineer and future Prime Minister of Italy, attended Babbage’s seminar and transcribed it into French. In 1842, Lovelace spent nine months translating Menabrea’s article into English. She added her own detailed notes that ended up being three times longer than the original article.

Published in 1843, Lovelace’s notes described the differences between the Analytical Engine and previous calculating machines, mainly the former’s ability to be programmed to solve any mathematical problem. Lovelace’s notes also included a new algorithm for calculating a sequence of Bernoulli numbers (a sequence of rational numbers that is common in number theory). Because Lovelace’s algorithm was the first to be created specifically for use on a computer, Lovelace became the world’s first computer programmer.
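For readers curious about what computing Bernoulli numbers involves, the short Python sketch below uses a standard modern recurrence with exact fractions. It is not a reconstruction of Lovelace's published procedure, which was laid out as a table of engine operations and used a different numbering of the sequence; the function name and the convention B₁ = −1/2 are choices made here for illustration.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n):
    """Return [B_0, B_1, ..., B_n] as exact fractions.

    Uses the recurrence sum_{k=0}^{m} C(m+1, k) * B_k = 0 for m >= 1,
    with B_0 = 1, solved for B_m at each step.
    """
    b = [Fraction(1)]
    for m in range(1, n + 1):
        acc = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-acc / (m + 1))
    return b


for index, value in enumerate(bernoulli_numbers(8)):
    print(index, value)
```

Each new number depends on all the earlier ones, so the calculation is naturally a loop that reuses stored results, the kind of task that suits a programmable machine.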

Whereas Babbage designed the Analytical Engine for purely mathematical purposes, Lovelace was the first person to see potential uses for computers that went far beyond number-crunching. Lovelace realized that the numbers within the computer could be used to represent other entities, such as letters or musical notes. Consequently, she also prefigured many of the concepts associated with modern computers, including software and subroutines.

The Analytical Engine was never actually built in Babbage’s or Lovelace’s lifetime. However, the lack of construction was due to funding problems and personality clashes between Babbage and potential donors, rather than because of any design flaws.

Throughout the remainder of his life, Babbage dabbled in many different fields. Several times, he attempted to become a Member of Parliament. He wrote several books, including one on political economy that explored the commercial advantages of the division of labor. He was instrumental in establishing the English postal system. He also invented an early type of speedometer and the locomotive cowcatcher (i.e., the metal frame that attaches to the front of a train to clear the track of obstacles). On October 18, 1871, Babbage died at his home in London. He was 79 years old.

After her work translating Menabrea’s article on Babbage’s lecture, Lovelace began working on several different projects, including one that involved creating a mathematical model for how the brain gives rise to thoughts and nerves to feelings, although she never achieved that objective. On November 27, 1852, Lovelace died from uterine cancer. She was just 36 years old.

During his lifetime, Babbage declined both a knighthood and a baronetcy. In 1824, he received the Gold Medal from the Royal Astronomical Society “for his invention of an engine for calculating mathematical and astronomical tables.” Since their deaths, many buildings, schools, university departments and awards have been named in Babbage’s and Lovelace’s honor.

Thanks to the work of Babbage and Lovelace, the field of computation was changed forever. Without Babbage’s work, the world’s first automatic digital computer wouldn’t have been conceived when it was. Likewise, many of the main elements that modern computers use today would likely not have been developed until much later. Without Lovelace, it may have taken humanity much longer to realize that computers could be used for more than just mathematical calculations. Together, Babbage and Lovelace laid the groundwork for modern-day computing, which is used by billions of people across the world and underpins much of our progress today. For these reasons, Charles Babbage and Ada Lovelace are our 49th Heroes of Progress.