Today marks the 40th installment in a series of articles by HumanProgress.org called Centers of Progress. Where does progress happen? The story of civilization is in many ways the story of the city. It is the city that has helped to create and define the modern world. This biweekly column will give a short overview of urban centers that were the sites of pivotal advances in culture, economics, politics, technology, etc.

Our 40th Center of Progress is San Francisco during the digital revolution, when entrepreneurs founded several major technology companies in the area. The southern portion of the broader San Francisco Bay Area earned the metonym “Silicon Valley” because of the high-technology hub’s association with the silicon transistor, used in all modern microprocessors. A microprocessor is the central unit or engine of a computer system, fabricated on a single chip.

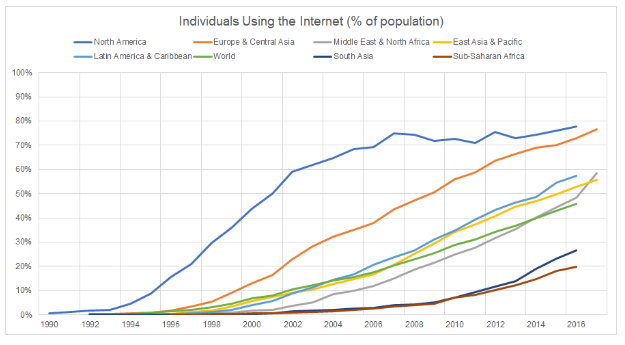

Humanity has long strived to develop tools to improve our survival odds and make our lives easier, more productive, and more enjoyable. In the long history of inventions that have made a difference in the average person’s daily life, digital technology, with its innumerable applications, stands out as one of the most significant innovations of the modern age.

Today the San Francisco Bay Area remains best known for its association with the technology sector. With its iconic Victorian houses, sharply sloping hills, trolleys, fog, Chinatown (which bills itself as the oldest and largest one outside Asia), and of course the Golden Gate Bridge, the city of San Francisco is famous for its distinctive views. As Encyclopedia Britannica notes, “San Francisco holds a secure place in the United States’ romantic dream of itself—a cool, elegant, handsome, worldly seaport whose steep streets offer breathtaking views of one of the world’s greatest bays.” Attempts to preserve the city’s appearance have contributed to tight restrictions on new construction. Perhaps relatedly, the city is one of the most expensive in the United States and suffers from a housing affordability crisis. San Francisco has in recent years struggled with widespread homelessness and related drug overdose deaths and crime. With both the country’s highest concentration of billionaires, thanks to the digital technology industry, and the ubiquitous presence of unhoused people, San Francisco is a city of extremes.

Today’s densely populated metropolis was once a landscape of sand dunes. In 1769, the first documented sighting of the San Francisco Bay was recorded by a scouting party led by the Spanish explorer Gaspar de Portolá (1716–1786). In 1776, European settlement of the area began, led by the Spanish missionary Francisco Palóu (1723–1789) and expeditionary José Joaquín Moraga (1745–1785). The latter went on to found San José, a city on the southern shore of San Francisco Bay, about 50 miles from San Francisco but located within the San Francisco Bay Area and the San Jose-San Francisco-Oakland Combined Statistical Area. San Francisco was the northernmost outpost of the Spanish Empire in North America and later the northernmost settlement in Mexico after that country’s independence. But the city remained relatively small and unknown.

In 1846, during the Mexican-American War, the United States captured the San Francisco area, although Mexico did not formally cede California until the 1848 Treaty of Guadalupe Hidalgo. At that time, San Francisco only had about 900 residents. That number grew rapidly during the California Gold Rush (1848–1855), when the discovery of gold turned the quiet village into a bustling boomtown of tens of thousands by the end of the period. Development of the city’s port led to further growth and helped the area become a hub in the early radio and telegraph industries, foreshadowing the city’s role as a leader in technology.

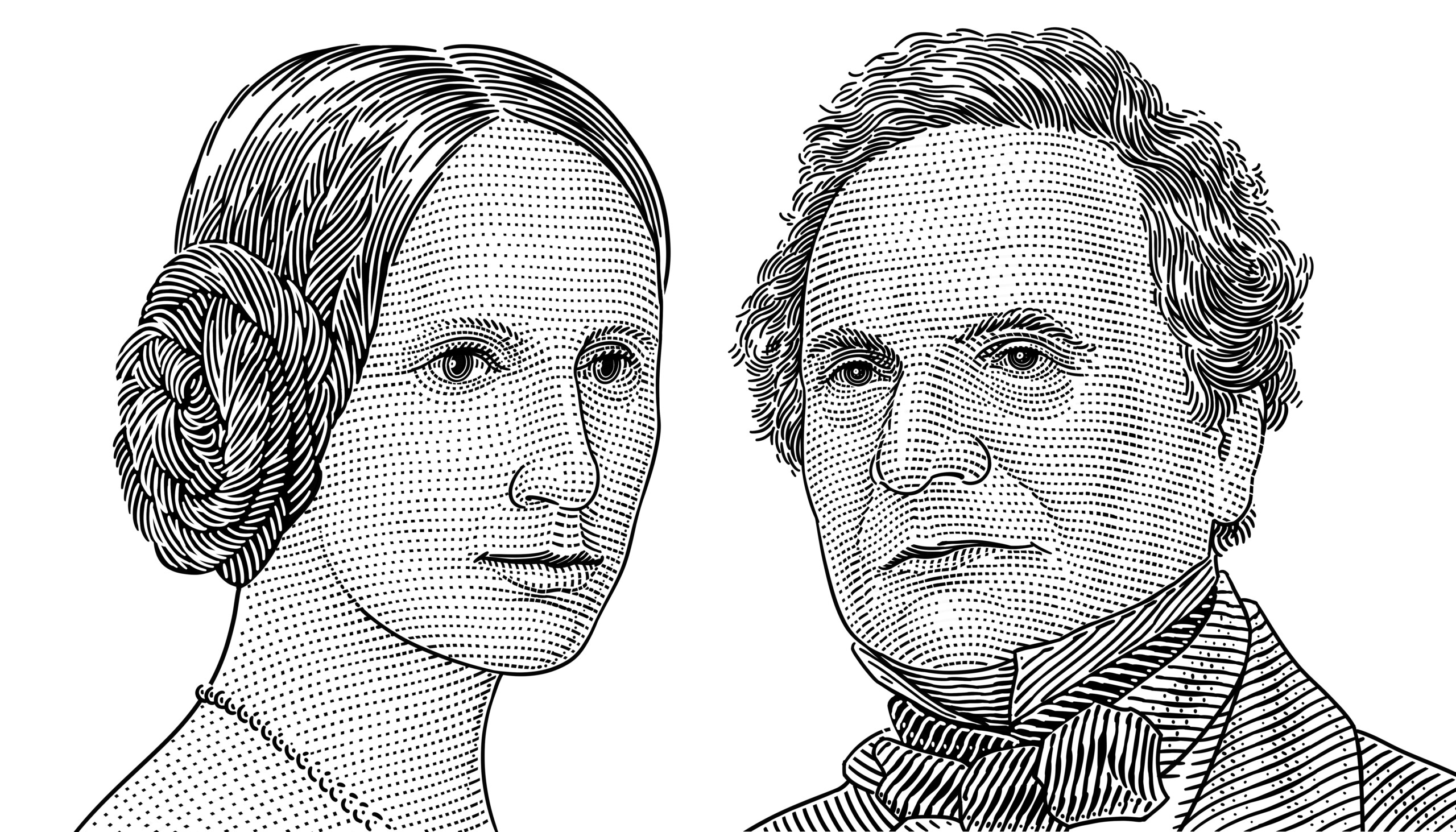

In 1906, three-quarters of the city was destroyed in a devastating earthquake and related fire caused by a gas line rupturing in the quake. The city rebuilt from the destruction and continued its growth, along with the broader Bay Area. In 1909, San Jose became the home of one of the first radio stations in the country. In the 1930s, the Golden Gate Bridge became a part of San Francisco’s skyline, and the city’s storied Alcatraz maximum security prison opened, holding famous prisoners such as the Prohibition-era gangster Al Capone (1899–1947). In 1939, in Palo Alto, just over 30 miles south of San Francisco, William Hewlett (1913–2001) and David Packard (1912–1996) founded a company that made oscilloscopes – laboratory instruments that display electronic signals as waves. They named the company Hewlett-Packard. During World War II, the company shifted to making radar and artillery technology. That field soon became linked to computing, because researchers at the University of Pennsylvania created a new tool to calculate artillery firing tables, among other tasks: the first general-purpose digital computer.

“Computer” was once a job title for a person who performed calculations. The first machine computer, named Electronic Numerical Integrator and Computer, or ENIAC, debuted in 1945. It cost about $500,000, or nearly $8 million in 2022 dollars, measured 8 feet tall and 80 feet long, weighed 30 tons, and needed constant maintenance to replace its fragile vacuum tubes. Back when computers were the size of a room and required many people to operate them, they also had about 1,300 times less computing power than a modern pocket-sized smartphone that costs about 17,000 times less.

San Francisco and Silicon Valley’s greatest claim to fame came with the dawn of more convenient and powerful digital technology. In 1956, the inventor William Shockley (1910–1989) moved from the East Coast to Mountain View, a city on the San Francisco Bay located about 40 miles south of San Francisco, to live closer to his ailing mother. She still lived in his childhood home of Palo Alto. That year he won the Nobel Prize in physics along with engineer John Bardeen (1908–1991) and physicist Walter Houser Brattain (1902–1987). The prize honored them for coinventing the first working transistor almost a decade earlier, in 1947, at Bell Laboratories in New Jersey.

After moving to California, Shockley founded Shockley Semiconductor Laboratory, the first company to make transistors and computer processors out of silicon; earlier versions used germanium, which cannot handle high temperatures. His work provided the basis for many further electronic developments. Also in 1956, IBM’s San Jose labs invented the hard-disk drive. That same year, Harry Huskey (1916–2017), a professor at the University of California, Berkeley, some 14 miles from San Francisco, designed Bendix’s first digital computer, the G-15.

Shockley had an abrasive personality and later became a controversial figure due to his vocal fringe views related to eugenics and mass sterilization. In 1957, eight of Shockley’s employees left over disagreements with him to start their own enterprise together with investor Sherman Fairchild (1896–1971). They named it Fairchild Semiconductor. Shockley called them “the Traitorous Eight.” In the 1960s, Fairchild Semiconductor made many of the computer components for the Apollo space program directed from Houston, our previous Center of Progress. In 1968, two of the “Traitorous Eight,” Gordon Moore (b. 1929) and Robert Noyce (1927–1990), the latter of whom earned the nickname “the Mayor of Silicon Valley,” left Fairchild to start a new company in Santa Clara, about 50 miles southeast of San Francisco. They named it Intel. Moore remains well-known as the creator of Moore’s Law. It was he who observed in 1965 that the number of components on an integrated circuit was doubling every year, a pace he revised in 1975 to a doubling every two years.

In 1969, the Stanford Research Institute at Stanford University, some 35 miles southeast of San Francisco, became one of the four “nodes” of the Advanced Research Projects Agency Network, or ARPANET. ARPANET was a research project that would one day become the internet. In 1970, Xerox opened the PARC laboratory in Palo Alto, which would go on to invent Ethernet networking and graphical user interfaces. In 1971, journalist Don Hoefler (1922–1986) published a three-part report on the burgeoning computer industry in the southern San Francisco Bay Area that popularized the term “Silicon Valley.” The pace of technological change picked up with the invention of microprocessors that same year.

Just as the 19th-century Gold Rush once attracted fortune seekers, the promise of potential profit and the excitement of new possibilities offered by digital technology drew entrepreneurs and researchers to the San Francisco Bay Area. In the 1970s, companies such as Atari, Apple, and Oracle were all founded in the area. By the 1980s, the San Francisco Bay Area was the undisputed capital of digital technology. (Some consider the years from 1985 to 2000 to constitute the golden era of Silicon Valley, when legendary entrepreneurs such as Steve Jobs (1955–2011) were active there.) San Francisco suffered another devastating earthquake in 1989, though the death toll was relatively small. In the 1990s, companies founded in the San Francisco Bay Area included eBay, Yahoo!, PayPal, and Google. The following decade, Facebook and Tesla joined them. As these companies created value for their customers and achieved commercial success, fortunes were made, and the San Francisco Bay Area grew wealthier. That was particularly true of San Francisco.

While many of the important events of the digital revolution took place across a range of cities in the San Francisco Bay Area, San Francisco itself was also home to the founding of several significant technology companies. Between 1995 and 2015, major companies founded in or relocated to San Francisco included Airbnb, Craigslist, Coinbase, DocuSign, DoorDash, Dropbox, Eventbrite, Fitbit, Flickr, GitHub, Grammarly, Instacart, Instagram, Lyft, Niantic, OpenTable, Pinterest, Reddit, Salesforce, Slack, TaskRabbit, Twitter, Uber, WordPress, and Yelp.

San Francisco helped create social media and the so-called sharing economy that offers many workers increased flexibility. By streamlining grocery deliveries, restaurant reservations, vacation home rentals, ride-hailing services, second-hand sales, cryptocurrency purchases, and work group chats, enterprises based in San Francisco have made countless transactions and interactions far more convenient.

New technologies often present challenges as well as benefits, and the innovations of San Francisco along with Silicon Valley are certainly no exception. Concerns about data privacy, cyberbullying, social media addiction, and challenges related to content moderation of online speech are just some of the issues attracting debate today that relate to digital technology. But there is no going back to a world without computers, and most would agree that the immense gains from digital technology outweigh the various dilemmas posed by it.

Practically everyone with access to a computer or smartphone has direct experience benefiting from the products of several San Francisco companies, and the broader San Francisco Bay Area played a role in the very creation of the internet and modern computers. It is difficult to summarize all the ways that computers, tablets, and smartphones have forever changed how humanity works, communicates, learns, seeks entertainment, and more. There is little doubt that San Francisco has been one of the most innovative and enterprising cities on Earth, helping to define the rise of the digital age that transformed the world. For these reasons, San Francisco is our 40th Center of Progress.